Research Projects

We realize energy-efficient computing systems through hardware and algorithm co-design. Our vision of end-to-end hardware-software convergence includes research projects that: (1) simulate, fabricate, and characterize novel electronic devices whose intrinsic dynamics natively provide functions directly applicable to AI, (2) develop device-physics-enabled algorithms for brain-inspired neuromorphic computing systems and AI accelerators, and (3) integrate the devices and algorithms with customized circuits and architectures on a chip.

By maximizing the capability of each counterpart, this co-design approach will meet the urgent need for energy efficiency, which is being eroded by the ever-growing data size and algorithmic complexity that better AI demands, while still achieving high performance. Smartly leveraging device dynamics will eliminate the unnecessary cost of digitally processing analog and nonlinear functions that come naturally to humans but are expensive for digital computers. Inspiration from the brain lets us build intelligent systems that learn and perform intelligent tasks better and more efficiently, as biological systems do, without astronomical training and inference costs. Together, these elements can accomplish the dream of true “Artificial Intelligence” that thinks, learns, and operates as the name implies, beyond the current stage of “machine intelligence”.

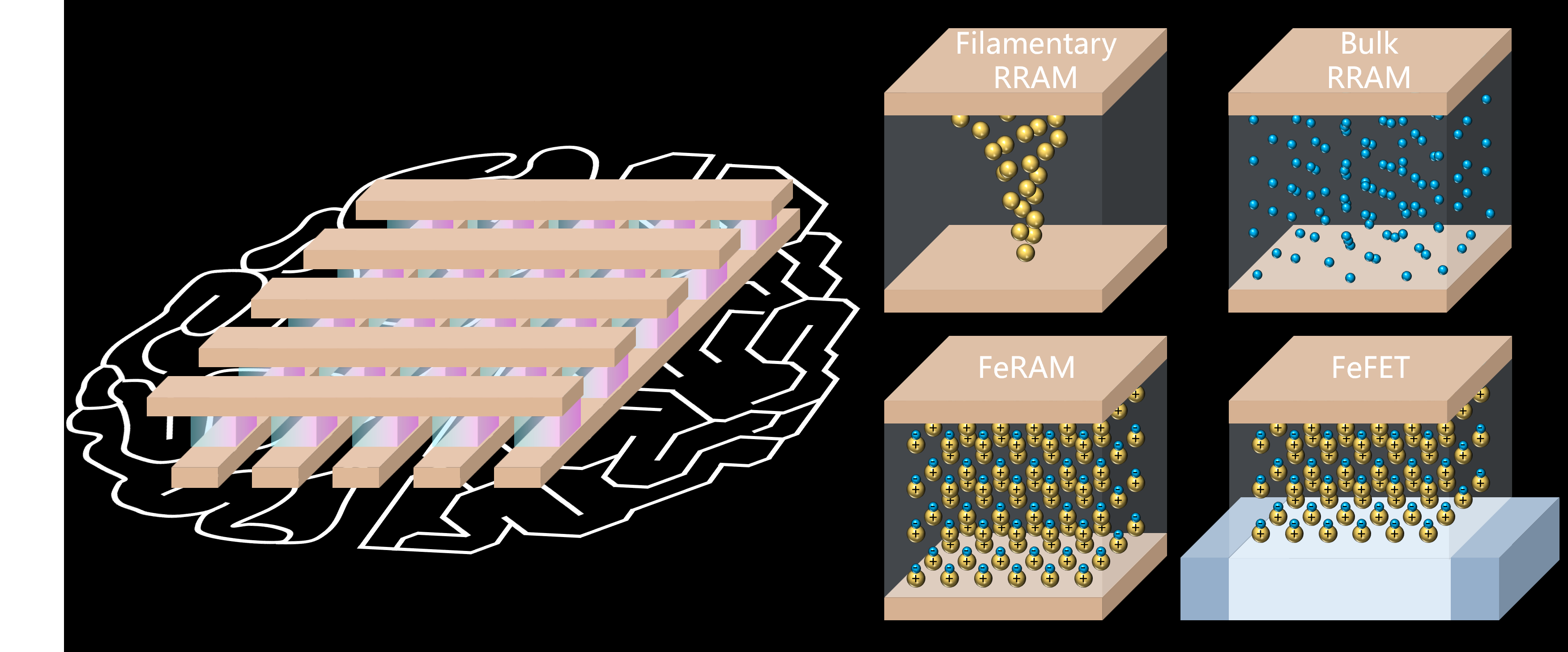

Compute-in-Memory with emerging memories

The emergence of AI has revolutionized the way humans solve problems and execute tasks. However, the demand for ever-better performance, combined with the ever-growing amount of information, has made AI more complex and power-hungry. Brain-inspired computing has been developed to accelerate this processing while reducing energy consumption. Compute-in-memory (CiM) is one such approach and has shown promise in accelerating multiply-and-accumulate (MAC) operations. It eliminates data movement between the computing and memory units that are segregated in the von Neumann architecture, leading to faster and more energy-efficient operation. We co-design hardware and algorithms for CiM-based computing systems, such as AI accelerators and Ising machines, using emerging memory technologies such as resistive random-access memory (RRAM) and Selector Only Memory (SOM). As part of this co-design, CMOS circuits and computer architectures are customized so that these memories work properly for their intended purpose.
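To illustrate why a memory array can perform MAC operations in place, the sketch below models an idealized resistive crossbar. It assumes each stored weight is encoded as a cell conductance and each input as a row read voltage. By Ohm's law each cell contributes a current equal to conductance times voltage, and by Kirchhoff's current law the currents on a shared column add up. The result is that every column current is one dot product. The function name `crossbar_mac` is hypothetical, and the model deliberately ignores real-device effects such as wire resistance, device nonlinearity and variability, negative-weight encoding, and the ADCs needed to read the currents out.

```python
from typing import List


def crossbar_mac(conductances: List[List[float]], voltages: List[float]) -> List[float]:
    """Return the column currents of an idealized crossbar (hypothetical helper).

    conductances[i][j]: conductance (siemens) of the cell at row i, column j.
    voltages[i]: read voltage (volts) applied to row i.
    Assumes ideal wires and perfectly linear (ohmic) cells.
    """
    rows = len(conductances)
    if rows != len(voltages):
        raise ValueError("one input voltage per row is required")
    cols = len(conductances[0]) if rows else 0
    currents = [0.0] * cols
    for i in range(rows):
        for j in range(cols):
            # Ohm's law: the cell at (i, j) passes a current of G * V.
            # Kirchhoff's current law: currents sharing column j are summed.
            currents[j] += conductances[i][j] * voltages[i]
    return currents


if __name__ == "__main__":
    # Two rows of inputs, two columns of outputs: one analog step yields
    # two dot products at once, without moving the weights out of memory.
    g = [[1e-6, 2e-6], [3e-6, 4e-6]]  # example conductances in siemens
    v = [0.1, 0.2]                    # example read voltages in volts
    print(crossbar_mac(g, v))         # column currents in amperes
```

In a digital von Neumann system, each of these products would require fetching a weight from memory. In this idealized model, the whole vector-matrix product comes out of a single read of the array.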

Related Papers

TAXI: Traveling Salesman Problem Accelerator with X-bar-based Ising Macros Powered by SOT-MRAMs and Hierarchical Clustering, Design Automation Conference, 2025

Perspective: Entropy-Stabilized Oxide Memristors, Applied Physics Letters, 2024

Columnar Learning Networks for Multisensory Spatiotemporal Learning, Advanced Intelligent Systems 4, 11 (2022)

RM-NTT: An RRAM-Based Compute-in-Memory Number Theoretic Transform Accelerator, IEEE JXCDC 8, 2 (2022)